-->

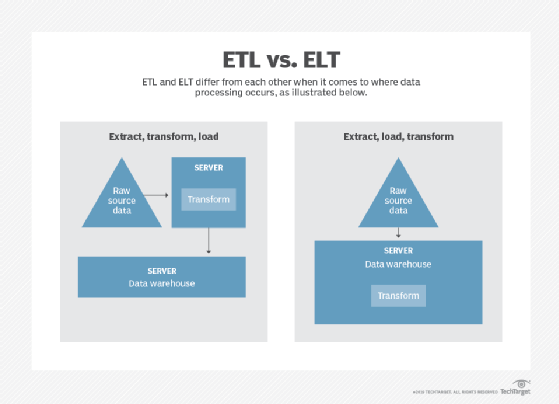

Traditional SMP dedicated SQL pools use an Extract, Transform, and Load (ETL) process for loading data. Synapse SQL, within Azure Synapse Analytics, uses distributed query processing architecture that takes advantage of the scalability and flexibility of compute and storage resources.

Using an Extract, Load, and Transform (ELT) process leverages built-in distributed query processing capabilities and eliminates the resources needed for data transformation prior to loading.

While dedicated SQL pools support many loading methods, including popular SQL Server options such as bcp and the SqlBulkCopy API, the fastest and most scalable way to load data is through PolyBase external tables and the COPY statement.

Kannad's latest Ameri-Fit version of its Integra 406 ELT is designed for an easy replacement 'Now, with the latest ELT we are able to treat the veins with this minimally invasive painless procedure that can be done as an outpatient surgery. Hospital offers laser treatment for varicose veins. With the ELT Program, DMV keeps California Certificates of Title in an electronic format in our database (in place of paper titles). Participating lienholders must then either become an ELT service provider or contract with one of DMV’s approved ELT service providers to transmit vehicle and title data. Keep me signed in: Copyright© 2021 Title Technologies, Inc. Contact Us Title Technologies, Inc. Kannad's latest Ameri-Fit version of its Integra 406 ELT is designed for an easy replacement 'Now, with the latest ELT we are able to treat the veins with this minimally invasive painless procedure that can be done as an outpatient surgery. Hospital offers laser treatment for varicose veins. To register for Electronic Lien & Title service with USA ELT please complete the form below. All businesses that finance vehicles, vessels and mobile homes (banks, credit unions, finance companies, buy here pay here dealers, and any other dealers) are encouraged, and in some states required, to register for ELT service.

With PolyBase and the COPY statement, you can access external data stored in Azure Blob storage or Azure Data Lake Store via the T-SQL language. For the most flexibility when loading, we recommend using the COPY statement.

What is ELT?

Extract, Load, and Transform (ELT) is a process by which data is extracted from a source system, loaded into a dedicated SQL pool, and then transformed.

The basic steps for implementing ELT are:

- Extract the source data into text files.

- Land the data into Azure Blob storage or Azure Data Lake Store.

- Prepare the data for loading.

- Load the data into staging tables with PolyBase or the COPY command.

- Transform the data.

- Insert the data into production tables.

For a loading tutorial, see loading data from Azure blob storage.

1. Extract the source data into text files

Getting data out of your source system depends on the storage location. The goal is to move the data into supported delimited text or CSV files.

Supported file formats

With PolyBase and the COPY statement, you can load data from UTF-8 and UTF-16 encoded delimited text or CSV files. In addition to delimited text or CSV files, it loads from the Hadoop file formats such as ORC and Parquet. PolyBase and the COPY statement can also load data from Gzip and Snappy compressed files.

Extended ASCII, fixed-width format, and nested formats such as WinZip or XML aren't supported. If you're exporting from SQL Server, you can use the bcp command-line tool to export the data into delimited text files.

2. Land the data into Azure Blob storage or Azure Data Lake Store

To land the data in Azure storage, you can move it to Azure Blob storage or Azure Data Lake Store Gen2. In either location, the data should be stored in text files. PolyBase and the COPY statement can load from either location.

Tools and services you can use to move data to Azure Storage:

- Azure ExpressRoute service enhances network throughput, performance, and predictability. ExpressRoute is a service that routes your data through a dedicated private connection to Azure. ExpressRoute connections do not route data through the public internet. The connections offer more reliability, faster speeds, lower latencies, and higher security than typical connections over the public internet.

- AZCopy utility moves data to Azure Storage over the public internet. This works if your data sizes are less than 10 TB. To perform loads on a regular basis with AZCopy, test the network speed to see if it is acceptable.

- Azure Data Factory (ADF) has a gateway that you can install on your local server. Then you can create a pipeline to move data from your local server up to Azure Storage. To use Data Factory with dedicated SQL pools, see Loading data for dedicated SQL pools.

3. Prepare the data for loading

You might need to prepare and clean the data in your storage account before loading. Data preparation can be performed while your data is in the source, as you export the data to text files, or after the data is in Azure Storage. It is easiest to work with the data as early in the process as possible.

Define the tables

You must first defined the table(s) you are loading to in your dedicated SQL pool when using the COPY statement.

If you are using PolyBase, you need to define external tables in your dedicated SQL pool before loading. PolyBase uses external tables to define and access the data in Azure Storage. An external table is similar to a database view. The external table contains the table schema and points to data that is stored outside the dedicated SQL pool.

Defining external tables involves specifying the data source, the format of the text files, and the table definitions. T-SQL syntax reference articles that you will need are:

Use the following SQL data type mapping when loading Parquet files:

| Parquet type | Parquet logical type (annotation) | SQL data type |

|---|---|---|

| BOOLEAN | bit | |

| BINARY / BYTE_ARRAY | varbinary | |

| DOUBLE | float | |

| FLOAT | real | |

| INT32 | int | |

| INT64 | bigint | |

| INT96 | datetime2 | |

| FIXED_LEN_BYTE_ARRAY | binary | |

| BINARY | UTF8 | nvarchar |

| BINARY | STRING | nvarchar |

| BINARY | ENUM | nvarchar |

| BINARY | UUID | uniqueidentifier |

| BINARY | DECIMAL | decimal |

| BINARY | JSON | nvarchar(MAX) |

| BINARY | BSON | varbinary(max) |

| FIXED_LEN_BYTE_ARRAY | DECIMAL | decimal |

| BYTE_ARRAY | INTERVAL | varchar(max), |

| INT32 | INT(8, true) | smallint |

| INT32 | INT(16, true) | smallint |

| INT32 | INT(32, true) | int |

| INT32 | INT(8, false) | tinyint |

| INT32 | INT(16, false) | int |

| INT32 | INT(32, false) | bigint |

| INT32 | DATE | date |

| INT32 | DECIMAL | decimal |

| INT32 | TIME (MILLIS ) | time |

| INT64 | INT(64, true) | bigint |

| INT64 | INT(64, false ) | decimal(20,0) |

| INT64 | DECIMAL | decimal |

| INT64 | TIME (MILLIS) | time |

| INT64 | TIMESTAMP (MILLIS) | datetime2 |

| Complex type | LIST | varchar(max) |

| Complex type | MAP | varchar(max) |

Important

- SQL dedicated pools do not currently support Parquet data types with MICROS and NANOS precision.

- You may experience the following error if types are mismatched between Parquet and SQL or if you have unsupported Parquet data types:'HdfsBridge::recordReaderFillBuffer - Unexpected error encountered filling record reader buffer: ClassCastException: ...'

- Loading a value outside the range of 0-127 into a tinyint column for Parquet and ORC file format is not supported.

For an example of creating external objects, see Create external tables.

Elton John

Format text files

If you are using PolyBase, the external objects defined need to align the rows of the text files with the external table and file format definition. The data in each row of the text file must align with the table definition.To format the text files:

- If your data is coming from a non-relational source, you need to transform it into rows and columns. Whether the data is from a relational or non-relational source, the data must be transformed to align with the column definitions for the table into which you plan to load the data.

- Format data in the text file to align with the columns and data types in the destination table. Misalignment between data types in the external text files and the dedicated SQL pool table causes rows to be rejected during the load.

- Separate fields in the text file with a terminator. Be sure to use a character or a character sequence that isn't found in your source data. Use the terminator you specified with CREATE EXTERNAL FILE FORMAT.

4. Load the data using PolyBase or the COPY statement

Elton John Movie

It is best practice to load data into a staging table. Staging tables allow you to handle errors without interfering with the production tables. A staging table also gives you the opportunity to use the dedicated SQL pool parallel processing architecture for data transformations before inserting the data into production tables.

Options for loading

To load data, you can use any of these loading options:

- The COPY statement is the recommended loading utility as it enables you to seamlessly and flexibly load data. The statement has many additional loading capabilities that PolyBase does not provide.

- PolyBase with T-SQL requires you to define external data objects.

- PolyBase and COPY statement with Azure Data Factory (ADF) is another orchestration tool. It defines a pipeline and schedules jobs.

- PolyBase with SSIS works well when your source data is in SQL Server. SSIS defines the source to destination table mappings, and also orchestrates the load. If you already have SSIS packages, you can modify the packages to work with the new data warehouse destination.

- PolyBase with Azure Databricks transfers data from a table to a Databricks dataframe and/or writes data from a Databricks dataframe to a table using PolyBase.

Other loading options

In addition to PolyBase and the COPY statement, you can use bcp or the SqlBulkCopy API. bcp loads directly to the database without going through Azure Blob storage, and is intended only for small loads.

Note

The load performance of these options is slower than PolyBase and the COPY statement.

5. Transform the data

While data is in the staging table, perform transformations that your workload requires. Then move the data into a production table.

Eltiempo

6. Insert the data into production tables

The INSERT INTO ... SELECT statement moves the data from the staging table to the permanent table.

As you design an ETL process, try running the process on a small test sample. Try extracting 1000 rows from the table to a file, move it to Azure, and then try loading it into a staging table.

Partner loading solutions

Many of our partners have loading solutions. To find out more, see a list of our solution partners.

Elton John

Next steps

For loading guidance, see Guidance for loading data.

Thanks to the ELT's enormous main mirror, HIRES will be able to explore and characterise planets outside of our Solar System. After decades of detecting exoplanets, the focus is now on observing and quantifying their atmospheres; the ultimate goal is to detect signatures of life. The unprecedented capabilities of HIRES will enable astronomers to investigate the chemical composition, layers, and weather in the atmospheres of many different types of exo-planets, from Neptune-like to Earth-like, including those in stars' habitable zones. HIRES will also be able to observe forming protoplanets and their impact on the natal protoplanetary disc.

Eltamd

Moving much further from Earth, HIRES is likely to be the first instrument to unambiguously detect the fingerprints of the first generation of stars (“population III” stars) that lit up the primordial Universe. This will be achieved by measuring the relative abundance of various chemical elements in the intergalactic medium in the early Universe and by detecting the chemical enrichment pattern typical of the first supernova explosions.

Elt Battery

Beyond astronomy, HIRES will reach into the territory of fundamental physics. It will help astronomers determine whether some of the fundamental constants of physics, which regulate most physical processes in the Universe, could actually change with time or space. In particular, HIRES will provide the most accurate tests of the fine-structure constant and the electron-to-proton mass ratio. Furthermore, HIRES will be used to directly measure the acceleration of the Universe’s expansion; such a measurement would greatly impact our understanding of the Universe and its fate.